⚠️ SNARKY DISCLAIMER

This is satire. It’s hot. It’s legal. It’s paranoid with purpose. If you’re a senator with fragile optics or a platform CEO trying to look “kid-friendly” while selling ad data to Beijing, this one’s not for you. But hey, if you love the smell of pre-crime censorship in the morning, buckle up.

Ah, Senate Bill 1748—the Kids Online Safety Act. You hear that title and think, “Finally, they’re gonna stop the algorithm from turning my sixth grader into an Andrew Tate cosplayer.”

But look under the hood, folks. That’s not a child safety bill.

That’s Project 2025 with a juice box.

Because behind the cute title and bipartisan virtue signaling is a bill that’s basically Handmaid’s Tale meets Comcast Terms of Service.

Let’s break it down:

This bill wants platforms to “prevent harm” to minors. Sounds noble, right? But how do you define harm? Offensive content? Excessive screen time? Satire? Politics? Climate change? Queer joy?

Here’s what it actually means:

Platforms will be forced to build AI‑driven content policing regimes that scan, flag, and purge anything that might hurt a theoretical child somewhere in the Kansas panhandle.

Not just TikTok. Not just porn.

Everything.

Your meme? Flagged.

Your post about reproductive rights? Age-gated.

Your satire? Canceled by a bot that thinks “Nixon with goat horns” is demonic grooming.

It’s “Won’t someone think of the children?” meets “This post violates community guidelines.”

🧠 Privacy? What Privacy?

This bill doesn’t just censor content—it opens the floodgates for state-sanctioned surveillance.

Because to enforce this, platforms will need age verification. And not your old “click if you’re 18” checkbox. Oh no.

We’re talking biometric scans. Digital IDs. Verified behavior profiles.

The same government that can’t fix the DMV now wants a centralized child-safety scoring algorithm on every website.

And who runs this database of age-verified, morality-tagged, childproofed citizens?

Well, under Project 2025, probably someone from Liberty University with a cross in one hand and a TikTok ban in the other.

👁️ Dystopia Speedrun:

If passed and enforced, S. 1748 leads directly to:

- National Online ID Systems: You want to watch YouTube? First scan your face.

- Platform Pre-Censorship: AI removes your post before it even goes live.

- Creators Blacklisted by Risk Scores: “Sorry, you made a joke about Jesus and meatballs. You’re now labeled PG-13 offensive.”

- Federal “Trusted Content Partners”: Fox News is rated safe. John Oliver? Restricted.

- Algorithmic Indoctrination: Every timeline scrubbed to reflect “safe” values. Who defines those? Well, you didn’t vote for them.

And here’s the kicker:

The people passing this are the same ones who scream about “cancel culture” and “woke censorship.”

They don’t hate censorship—they just want exclusive broadcast rights.

🔮 Project 2025 Fallout:

Pair this with the broader goals of Project 2025—where the Executive becomes judge, jury, and content curator—and suddenly your child’s “safe online experience” comes preloaded with:

- Prayer algorithms.

- Abstinence filters.

- History books without civil rights.

- Gender? Removed for your protection.

This bill is the digital trigger for ideological sterilization. Not safety.

🎭 And Let’s Talk About Creators:

Independent voices? Done.

Satirists? Shadowbanned.

Political artists? Monetization denied.

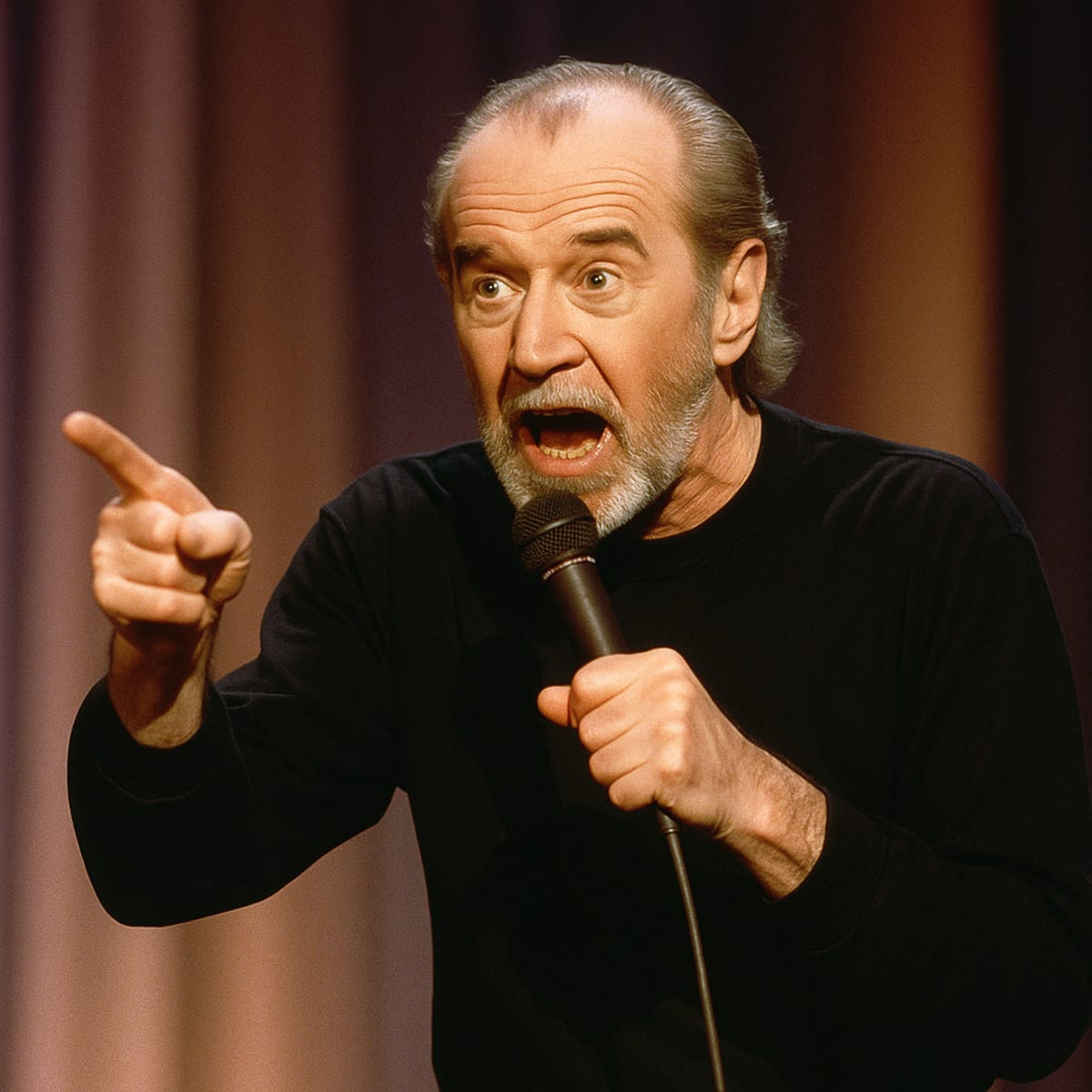

Comedians? Age-restricted faster than you can say “George Carlin.”

The internet was the last semi-free public square—and this bill gives it back to the hall monitors.

🧨 FINAL THOUGHT

Senate Bill 1748 doesn’t protect kids.

It doesn’t protect families.

It protects power.

It’s a velvet-gloved content gulag, and they’re selling it with a cartoon smile and a downloadable parent portal.

Because when fascism finally comes for your feed, it’ll be branded, family-friendly, and Terms-of-Service compliant.

They don’t want to stop the bad guys.

They want to own the narrative.

And the best way to do that?

Slap a filter on the future and call it safety.