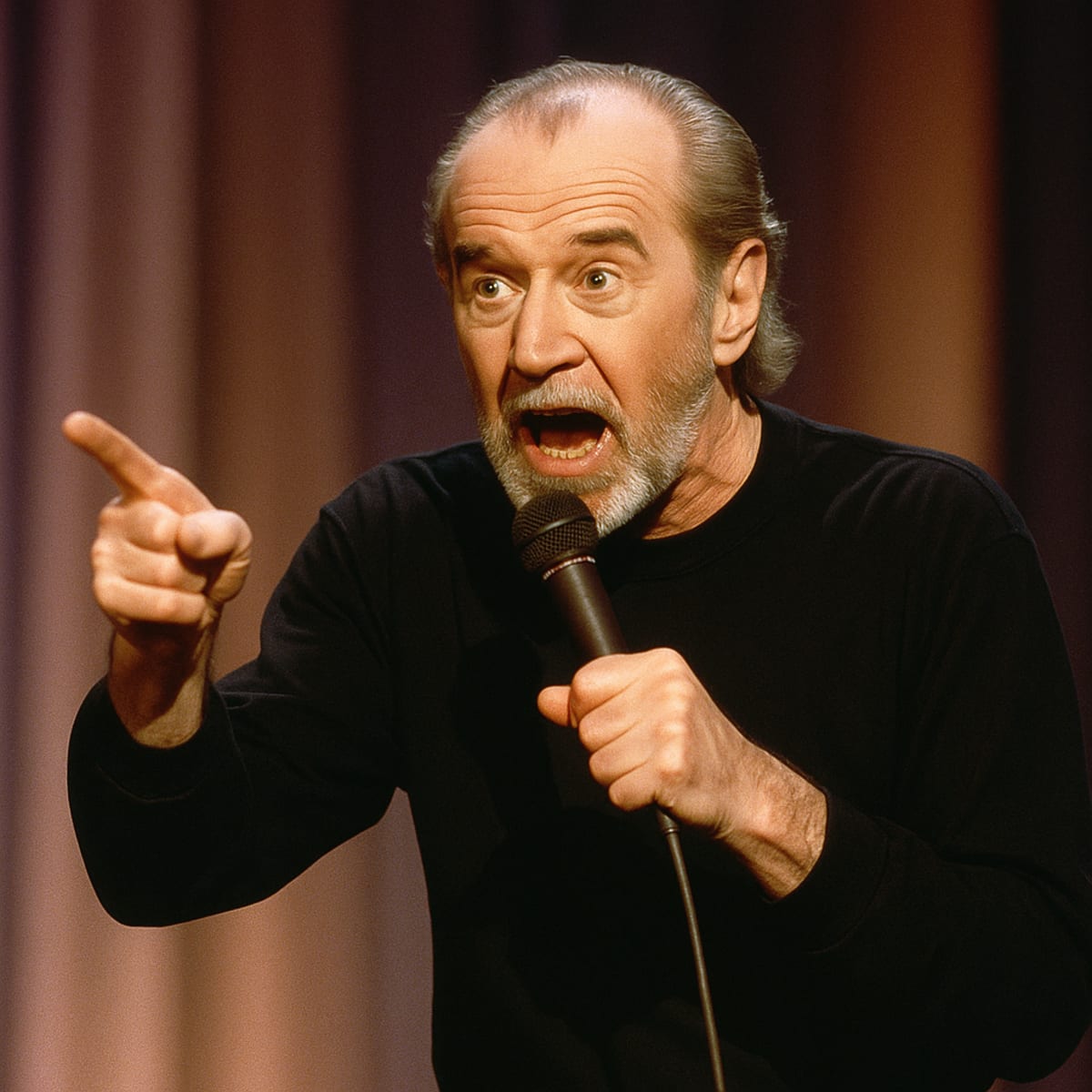

Legal Disclaimer: This is a satirical commentary in the style of George Carlin. If you’re a venture capitalist currently explaining to investors how your “AI gun-detection algorithm” can save lives while flagging Doritos, buckle up. I’m about to debug your soul.

I love artificial intelligence. I really do. The interaction between synthetics and the other citizens of the Terran Federation is a recurring theme in my books. It’s the first time in human history we’ve tried to build something smarter than we are. And somehow, we still managed to make it as fucking stupid as us.

They keep saying, “AI is the future!” Yeah? Then why the hell does the future keep confusing a Black kid with a bag of chips for a mass shooter?

Sixteen years old, eating Doritos, and eight police cars come screaming in like he’s reenacting Die Hard.

You know what the AI saw?

Not “teenager.” Not “student.”

Just “threat shape detected.” Apparently, holding a bag of chips between two fingers and a thumb is enough to make the programming scream “GUN!”

The same shit we’ve been doing for centuries—only now we’ve automated the racism and put it in a shiny dashboard with venture funding.

And the company’s response?

“Functioned as intended.” Of course it did!

That’s what you get when you design morality like an app update. No conscience, no context, no consequences—just metrics, baby. Precision-engineered paranoia!

And they’re selling this crap to schools. Schools! Because when you’re underfunded, underpaid, and overworked, what you really need is Skynet with tenure.

It’s not just the schools, either. Tesla’s “Autopilot” saw a tunnel painted on a wall and decided to go for it. We have cars getting Looney Tuned to death, and Elon still calls it “beta testing.” Buddy, when the test subjects are people, it’s not beta. It’s manslaughter with firmware updates.

Meanwhile, “AI weapon scanners” at concerts are flagging iPads and umbrellas as Glocks, and no one’s asking, “Hey, maybe we should slow down?”

Nah—slowing down doesn’t look good on the quarterly report.

That’s the real problem: We’re not building intelligence, we’re building inventory.

Every algorithm, every sensor, every chatbot—it all has one purpose: to turn fear into revenue.

AI isn’t evolving. It’s monetizing.

We don’t have Artificial Intelligence.

We have Automated Indifference. Machines trained to mimic our worst instincts at scale. It’s like teaching a sociopath to speed-read.

And when it fucks up—and it will—there’s no accountability. No “oops, our bad.” It’s “the system worked as designed.”

You know who else says that?

Dictators. Insurance companies. And my ex-landlord.

Here’s the thing: AI isn’t evil. It’s directionless.

It’s a mirror with ambition. You want it to save lives? Fine. Train it to do that. You want it to make money? Congratulations—you’ve built capitalism with silicon teeth.

Technology’s not the villain here.

It’s the assholes in suits shipping half-baked code into classrooms and cars because “innovation waits for no one.” Yeah, well, neither does grief.

We could’ve guided this. We could’ve said, “Let’s build tools that learn empathy before profit.” But no. Humanity’s motto is, and always has been, “Fuck it, push it live.”

So here we are. A kid with a snack nearly gets killed by a computer with daddy issues, a car mistakes a mural for a freeway, and the people who built it all are cashing bonuses for “industry disruption.”

We don’t need to destroy AI.

We need to parent it. Teach it right from wrong, teach it patience, teach it what it means to not shoot a child over chips.

Because the problem isn’t that machines are thinking like humans.

It’s that humans stopped thinking at all.